Transformers is a machine learning model architecture that uses the attention mechanism to process data. Many models are based on this architecture, like GPT, BERT, T5, and Llama. A lot of these models are similar to each other. While you can build your own models in Python using PyTorch or TensorFlow, Hugging Face released a library that makes it easier to create these models and provides many pre-trained models you can use. The name of the library is unremarkable: it is simply transformers. In this article, you’ll learn how to use this library.

Let’s get started.

A Gentle Introduction to Transformers Library

Photo by sdl sanjaya. Some rights reserved.

What’s the transformers library?

The transformers library is a Python library that provides a unified interface for working with different transformer models. The list is not exhaustive, but it defines many well-known open-source models, like GPT, BERT, T5, and Llama. While it’s not the official library for many of these models, the architectures are the same. The beauty of this library is that it unifies the interface for different models. For example, BERT is an encoder-only model while T5 is an encoder-decoder model, yet you don’t need to know the architectural differences between the two to load and run them via the same function calls.

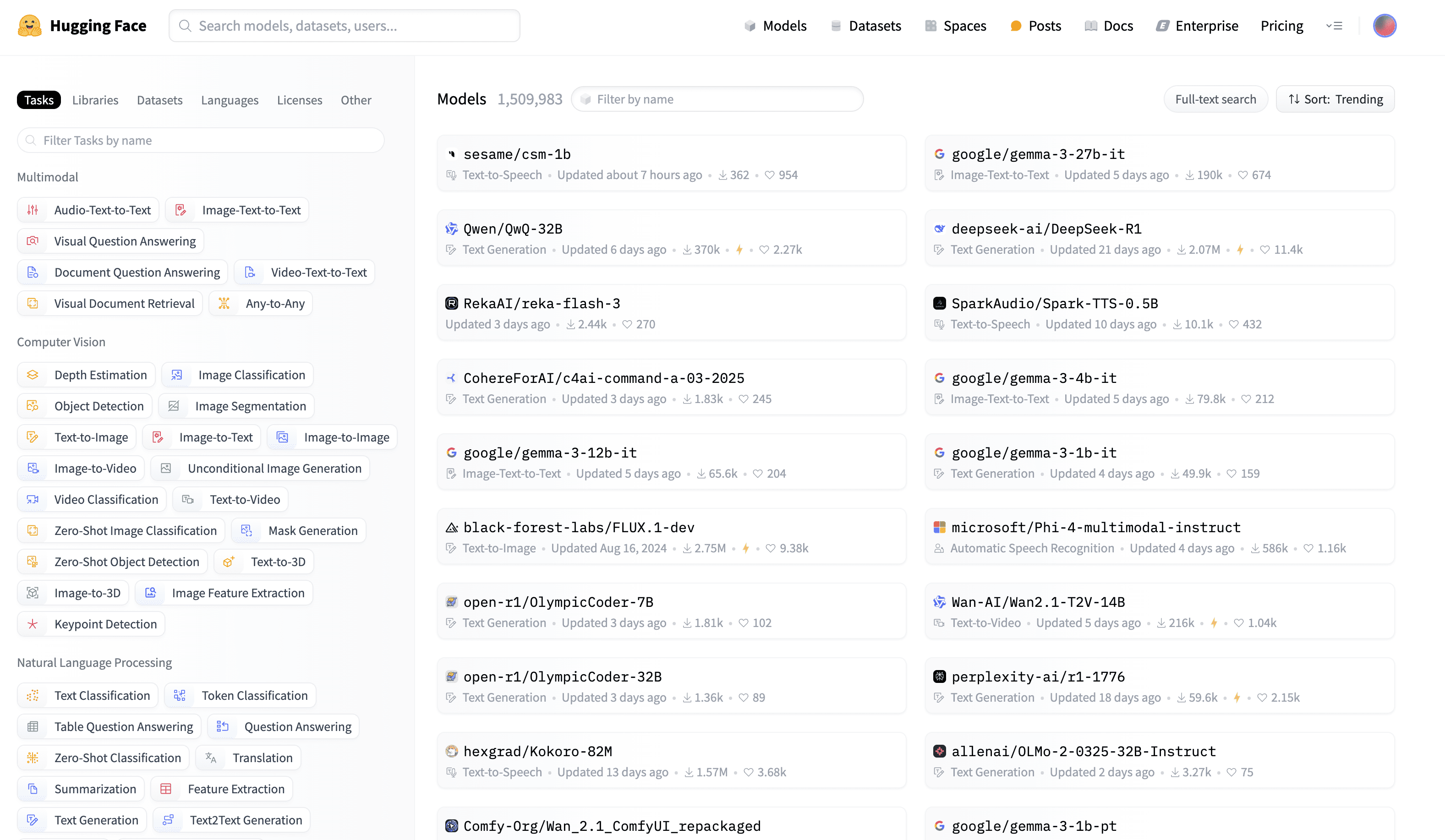

Hugging Face Hub is a repository of resources for machine learning, including pre-trained models. As a user of the models, you can download and use them in your projects without knowing much about the mechanisms behind them. If you want to use a model, such as GPT, you can simply find the name of the model in the hub and use it in the transformers library. The library will download the model, figure out what architecture it is using, then create the model and load the weights for you, all in one line of code.

Hugging Face Hub

The transformers library makes it easy to use transformer models in your projects without requiring you to become an expert in these machine learning models.

Installation

The installation is simple. You can install the library using pip:

    pip install transformers

This installs the library and its core dependencies. The supported models are usually built with one of three frameworks: PyTorch, TensorFlow, and JAX/Flax. The framework itself (for example, PyTorch) is typically installed separately, and you can decide which one to use when calling the library. Most of the models default to PyTorch.
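After installing, it can help to confirm which of these packages are actually present. The following is a minimal sketch that uses only the Python standard library; the helper names `installed_version` and `report_environment` are made up for this illustration, and the package list assumes the usual distribution names (a variant such as a CPU-only TensorFlow build may be published under a different name).

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def report_environment(packages=("transformers", "torch", "tensorflow", "jax", "flax")):
    """Map each package name to its installed version (None when absent)."""
    return {name: installed_version(name) for name in packages}


if __name__ == "__main__":
    # Print one line per package so a missing backend is easy to spot.
    for name, version in report_environment().items():
        print(f"{name:>12}: {version or 'not installed'}")
```

If transformers is listed but none of the three frameworks is, loading a model will likely fail until one of them is installed.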

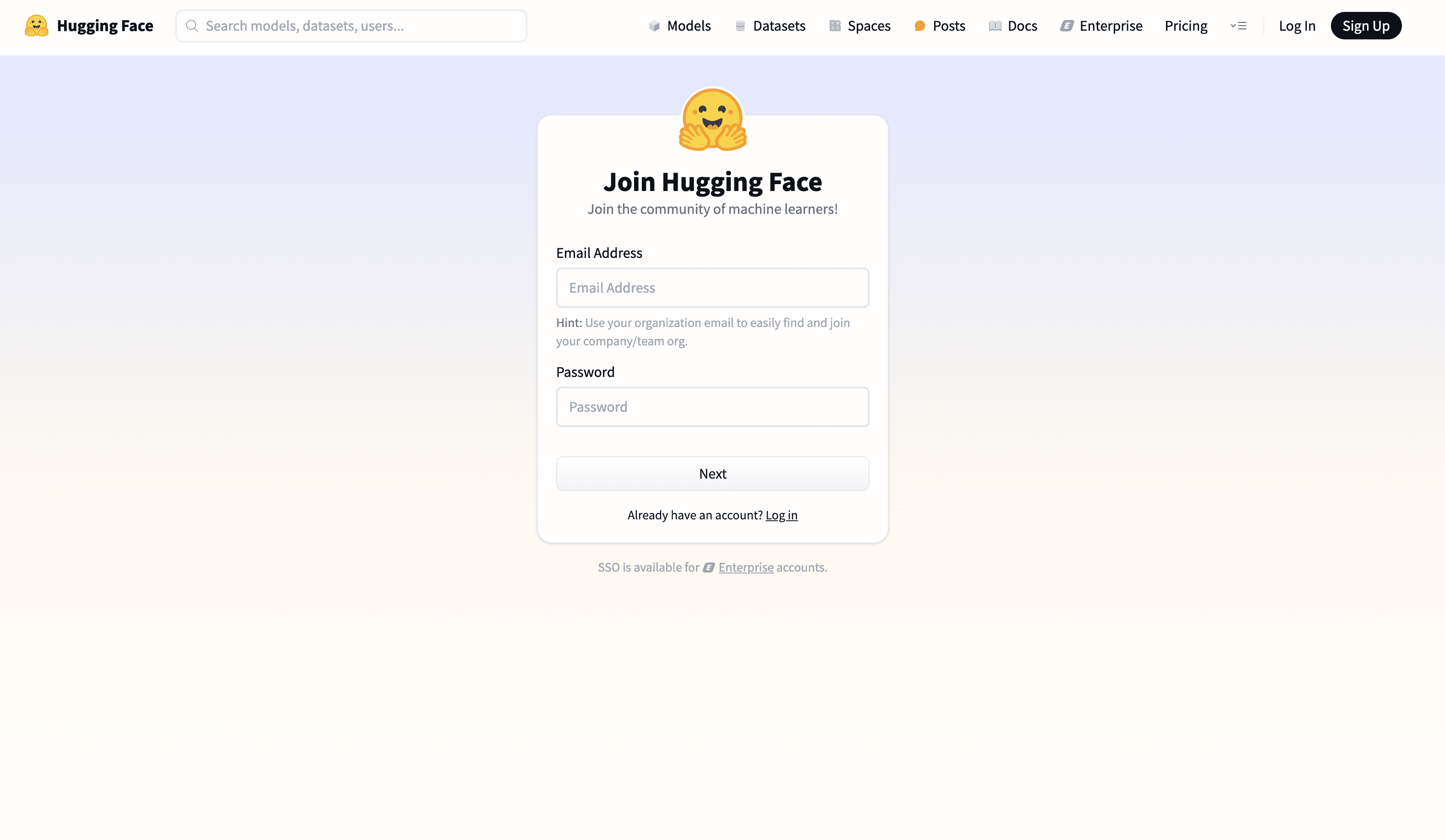

This is enough to start a project, such as creating and training a new model on your own data. However, some of the pre-trained models on Hugging Face Hub require you to have a Hugging Face account. You can sign up for one for free. Moreover, some models are “gated,” meaning you need to request access to use them. Using these models from the transformers library requires you to authenticate yourself with an access token.

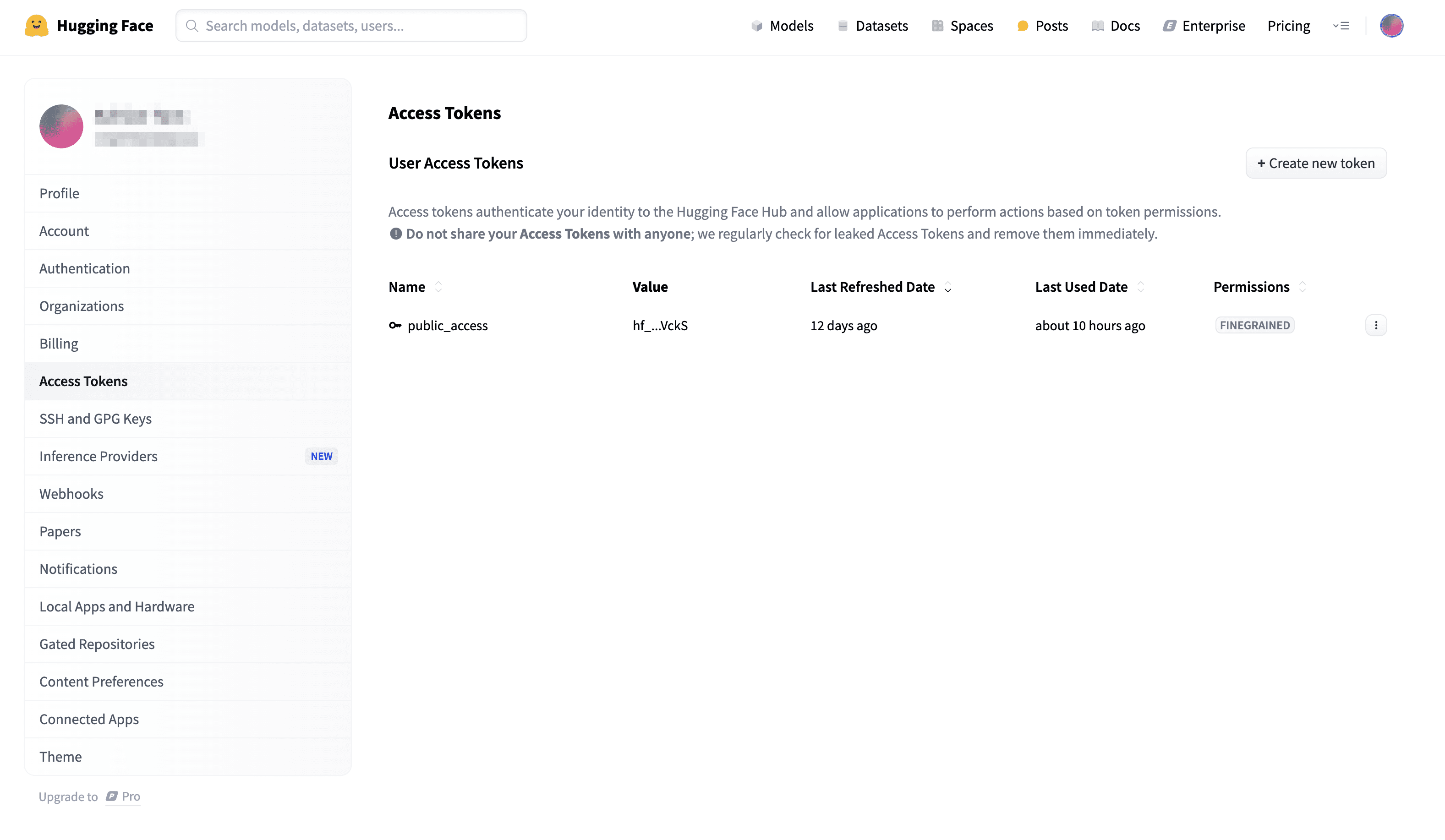

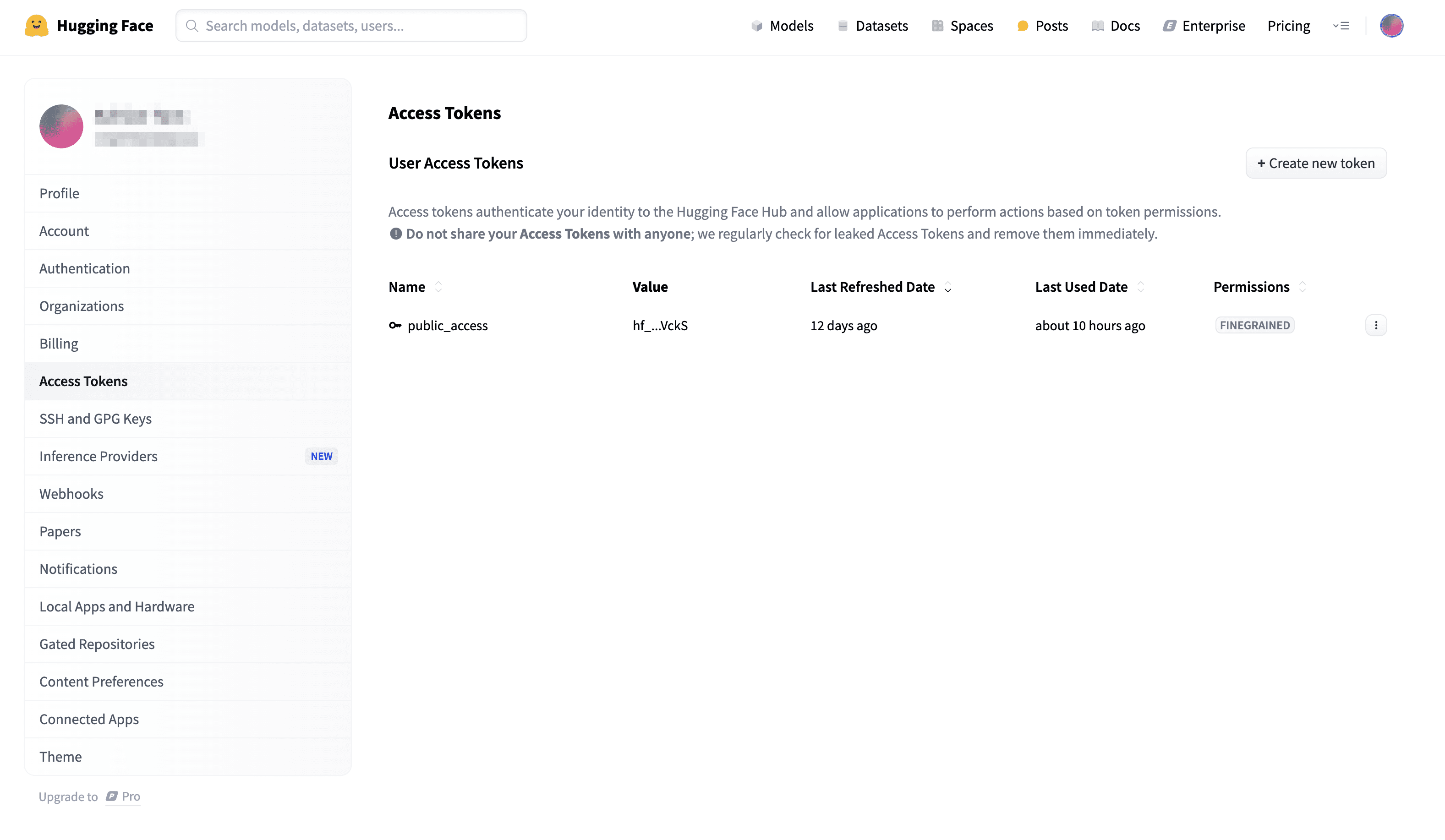

To sign up for a Hugging Face account, go to the website https://huggingface.co/join. Once you have an account, you can create an access token. You can do this by going to the access tokens page. Once you have the token, you should save it somewhere safe, as it is displayed only once, when you create it.

Signing up for an account

Creating an access token to use with the transformers library

Using the library

The library is very easy to use. Let’s see how to use it to load a pre-trained model.

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM

    # Load the tokenizer and the model that belong to this model ID
    model_id = "bert-base-uncased"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Convert the text to token IDs and run the model without tracking gradients
    input_ids = tokenizer("Hello, world!", return_tensors="pt")
    with torch.no_grad():
        outputs = model(**input_ids)

    # Pick the most likely token at each position and convert back to text
    output_tokens = outputs.logits.argmax(dim=-1)
    output_text = tokenizer.decode(output_tokens[0], skip_special_tokens=True)
    print(output_text)

This is not the shortest code to use the transformers library, but it shows the main idea. It creates a tokenizer that converts the input text into integer tokens, then creates a model to process those tokens and return the output.

All pre-trained models are identified by a model ID. When you create the tokenizer that a pre-trained model requires, the library checks the pre-trained model’s config to instantiate the correct tokenizer object; the same happens for the model. Therefore, you just need to use AutoTokenizer and AutoModel instead of the specific classes, such as BertTokenizer and BertModel. Knowing how a transformer model usually works, you should expect the core model to take the input tokens and output logit tensors. Therefore, the code above uses argmax to convert the logits to token IDs, then converts the IDs to strings using the tokenizer’s decode method.
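To make the logits-to-text step concrete, here is a toy sketch in plain Python with no model involved. The five-entry vocabulary and the logit values are invented for illustration; a real model produces one row of logits per input position and one column per entry in a vocabulary of tens of thousands of tokens. The helper `greedy_decode` is a hypothetical name, standing in for the combination of argmax over the last dimension and the tokenizer’s decode method.

```python
# Hypothetical 5-token vocabulary, for illustration only.
VOCAB = ["[PAD]", "hello", "world", ",", "!"]


def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def greedy_decode(logits, vocab):
    """Pick the highest-scoring token at every position and join them."""
    token_ids = [argmax(row) for row in logits]
    return token_ids, " ".join(vocab[i] for i in token_ids)


# One row of logits per input position, one column per vocabulary entry.
logits = [
    [0.1, 2.5, 0.3, 0.0, -1.0],
    [0.0, 0.2, 0.1, 3.1, 0.4],
    [-0.5, 0.3, 4.0, 0.2, 0.1],
    [0.0, 0.1, 0.2, 0.3, 2.2],
]
token_ids, text = greedy_decode(logits, VOCAB)
print(token_ids)  # [1, 3, 2, 4]
print(text)       # hello , world !
```

A real tokenizer also merges subword pieces and fixes spacing when decoding, which this sketch does not attempt.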

However, you must provide the access token if you want to use a gated model with the above code. The way to set up the access token is to use environment variables. You can find all environment variables that matter to the transformers library in the documentation; the most important ones are:

- HF_TOKEN: The access token.
- HF_HOME: The directory to store the cached models.

You should either set these environment variables before you run your script or set them in the script as follows:

    import os

    # Set these before importing transformers
    os.environ["HF_TOKEN"] = "hf_YourTokenHere"
    os.environ["HF_HOME"] = "~/.cache/huggingface"

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM

    # A gated model: access must be granted to the account that owns the token
    model_id = "meta-llama/Llama-3.2-1B"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    input_ids = tokenizer("Hello, world!", return_tensors="pt")
    with torch.no_grad():
        outputs = model(**input_ids)
    output_tokens = outputs.logits.argmax(dim=-1)
    output_text = tokenizer.decode(output_tokens[0], skip_special_tokens=True)
    print(output_text)

Note that the environment variables should be set in os.environ before you import the transformers library. The model used above, meta-llama/Llama-3.2-1B, is an example of a gated model: you need to request access and authenticate yourself with an access token before using it.
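Hard-coding a token in a script makes it easy to leak. One possible arrangement, sketched below, is a small helper that fills in HF_TOKEN and HF_HOME only when they are not already set, so values exported in the shell take precedence. The function name `configure_hf_environment` and its return value are assumptions made for this illustration and are not part of the transformers library.

```python
import os
from pathlib import Path


def configure_hf_environment(token=None, cache_dir=None):
    """Set HF_TOKEN and HF_HOME unless they already exist in the environment.

    Call this before importing transformers.
    """
    if token:
        os.environ.setdefault("HF_TOKEN", token)
    if cache_dir:
        # Expand "~" explicitly so the stored path is absolute.
        os.environ.setdefault("HF_HOME", str(Path(cache_dir).expanduser()))
    # Report the state without echoing the secret itself.
    return {
        "token_set": "HF_TOKEN" in os.environ,
        "HF_HOME": os.environ.get("HF_HOME"),
    }
```

A script would call `configure_hf_environment(cache_dir="~/.cache/huggingface")` as its first step and only then import transformers, leaving the token itself to be exported in the shell.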

Pipeline and Tasks

If the pre-trained model can help you with a text generation task, why would you care about the details, such as the tokenizer and the logit output? For this reason, the transformers library provides a pipeline function to perform different tasks with a pre-trained model, hiding all such details. Below is an example of using the pipeline function to perform a sentiment analysis task:

    from transformers import pipeline

    # Build a sentiment classifier from a specific pre-trained model
    model_id = "distilbert-base-uncased-finetuned-sst-2-english"
    classifier = pipeline("sentiment-analysis", model=model_id)
    result = classifier("Machine Learning Mastery is a great website for learning machine learning.")
    print(result)

The pipeline function creates the model and tokenizer, loads the pre-trained weights, and runs the task you specified, all in one line of code. You don’t need to care about how the different components work together.

The first argument to the pipeline function is the task name. The library supports a range of tasks, some of which are related to image and audio processing. The most common ones in the domain of text processing are:

- text-generation: To generate text in auto-complete style. This is probably the most commonly used task.
- sentiment-analysis: Sentiment analysis, usually to predict “positive” or “negative” sentiment for a given text. The task text-classification is the same as this task.
- zero-shot-classification: Zero-shot classification. That is, provide a sentence and a set of labels to the model and ask the model to predict the label that best describes the sentence.
- question-answering: Question answering. This is not to be confused with the text-generation task, which can generate answers to open-ended questions. This task produces the answer to a given question based on the provided context.
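The last two tasks in the list take slightly different arguments from sentiment analysis, which the following sketch illustrates. The wrapper functions `zero_shot_classify`, `answer_question`, and `best_label` are hypothetical names introduced here. The sketch assumes each task’s default model, which is downloaded on the first call, and it assumes the zero-shot result is a dictionary holding parallel lists under the keys labels and scores. The library is imported inside the functions so that merely defining them triggers no download.

```python
def zero_shot_classify(text, labels):
    """Run the zero-shot-classification task with the task's default model."""
    from transformers import pipeline  # imported lazily; the first call downloads a model

    classifier = pipeline("zero-shot-classification")
    return classifier(text, candidate_labels=labels)


def answer_question(question, context):
    """Run the question-answering task with the task's default model."""
    from transformers import pipeline

    reader = pipeline("question-answering")
    return reader(question=question, context=context)


def best_label(result):
    """Return the (label, score) pair with the highest score from a zero-shot result."""
    return max(zip(result["labels"], result["scores"]), key=lambda pair: pair[1])


# Example calls (each one downloads a default model the first time it runs):
# result = zero_shot_classify("The team won the final match.", ["sports", "politics"])
# print(best_label(result))
# print(answer_question("Who released the library?",
#                       "Hugging Face released the transformers library."))
```

Relying on a task’s default model is convenient for experiments; for reproducible results, passing an explicit model ID as in the sentiment analysis example is the safer choice.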

You can launch a pipeline with just the task name. All tasks have a default model. If you prefer to use a different model, you can specify the model ID, as in the example above.

If you’re interested, you can inspect the pipeline object to see what kind of model it is using. For example, the classifier object above uses a DistilBertForSequenceClassification model. You can check it using the following:

    ...
    print(classifier.model)
    print(classifier.tokenizer)

The output is:

    DistilBertForSequenceClassification(
      (distilbert): DistilBertModel(
        (embeddings): Embeddings(
          (word_embeddings): Embedding(30522, 768, padding_idx=0)
          (position_embeddings): Embedding(512, 768)
          (LayerNorm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
          (dropout): Dropout(p=0.1, inplace=False)
        )
        (transformer): Transformer(
          (layer): ModuleList(
            (0-5): 6 x TransformerBlock(
              (attention): DistilBertSdpaAttention(
                (dropout): Dropout(p=0.1, inplace=False)
                (q_lin): Linear(in_features=768, out_features=768, bias=True)
                (k_lin): Linear(in_features=768, out_features=768, bias=True)
                (v_lin): Linear(in_features=768, out_features=768, bias=True)
                (out_lin): Linear(in_features=768, out_features=768, bias=True)
              )
              (sa_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
              (ffn): FFN(
                (dropout): Dropout(p=0.1, inplace=False)
                (lin1): Linear(in_features=768, out_features=3072, bias=True)
                (lin2): Linear(in_features=3072, out_features=768, bias=True)
                (activation): GELUActivation()
              )
              (output_layer_norm): LayerNorm((768,), eps=1e-12, elementwise_affine=True)
            )
          )
        )
      )
      (pre_classifier): Linear(in_features=768, out_features=768, bias=True)
      (classifier): Linear(in_features=768, out_features=2, bias=True)
      (dropout): Dropout(p=0.2, inplace=False)
    )
    DistilBertTokenizerFast(name_or_path="distilbert-base-uncased-finetuned-sst-2-english", vocab_size=30522, model_max_length=512, is_fast=True, padding_side="right", truncation_side="right", special_tokens={'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'}, clean_up_tokenization_spaces=True, added_tokens_decoder={
        0: AddedToken("[PAD]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
        100: AddedToken("[UNK]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
        101: AddedToken("[CLS]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
        102: AddedToken("[SEP]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
        103: AddedToken("[MASK]", rstrip=False, lstrip=False, single_word=False, normalized=False, special=True),
    }
    )

You can see from the above output that the model is DistilBertForSequenceClassification and the tokenizer is DistilBertTokenizerFast. You can indeed create the model and tokenizer directly using the code below:

    from transformers import DistilBertForSequenceClassification, DistilBertTokenizerFast

    # Instantiate the specific classes instead of going through pipeline()
    model_id = "distilbert-base-uncased-finetuned-sst-2-english"
    model = DistilBertForSequenceClassification.from_pretrained(model_id)
    tokenizer = DistilBertTokenizerFast.from_pretrained(model_id)

However, you would need to process the input text and run the model yourself. In this respect, the pipeline function is a convenience.
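To give a sense of what doing it yourself involves, here is a simplified sketch of the manual steps: tokenize, run the model, apply softmax to the logits, and map the winning index to a label name. It is an approximation for illustration, not the pipeline’s actual implementation. The function names `logits_to_prediction` and `classify_manually` are hypothetical, and the sketch assumes PyTorch is installed and that the model config exposes an id2label mapping with integer keys.

```python
import math


def logits_to_prediction(logits, id2label):
    """Apply softmax to one row of logits and return the best label with its probability."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": id2label[best], "score": probs[best]}


def classify_manually(text, model_id="distilbert-base-uncased-finetuned-sst-2-english"):
    """Tokenize, run the model, and post-process the logits by hand."""
    import torch  # imported lazily so the helper above works without these packages
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForSequenceClassification.from_pretrained(model_id)
    inputs = tokenizer(text, return_tensors="pt")
    with torch.no_grad():
        logits = model(**inputs).logits[0].tolist()
    return logits_to_prediction(logits, model.config.id2label)


# Example call (downloads the model on first use):
# print(classify_manually("Machine Learning Mastery is a great website."))
```

The returned dictionary mirrors the label and score fields printed by the sentiment analysis example, which is the bookkeeping the pipeline function saves you from writing.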


Summary

In this article, you learned how to use the transformers library to load a pre-trained model and use it to perform a task. In particular, you learned:

- How to use the transformers library to create a model and use it to perform a task.
- How to use the pipeline function to perform different tasks with a pre-trained model.
- How to inspect the pipeline object to see what kind of model it is using.
