Prompt Engineering Patterns for Successful RAG Implementations

Image by Author | Ideogram

You know it as well as I do: people are relying more and more on generative AI and large language models (LLMs) for quick and easy information acquisition. These models return often-impressive generated text from a simple instruction prompt. However, sometimes the outputs are inaccurate or irrelevant to the prompt. This is where the idea for retrieval-augmented generation techniques came from.

RAG, or retrieval-augmented generation, is a technique that uses external knowledge to improve an LLM’s generated results. By building a knowledge base that contains the relevant information for all of your use cases, RAG can retrieve the most relevant data to provide more context for the generation model.

One crucial part of RAG is the prompting, either within the retrieval portion, where we acquire the relevant information, or within the generation portion, where we pass the context to generate the text. That’s why it’s essential to manage the prompts correctly, so that RAG can provide the best, most relevant output possible.

This article will explore various prompt engineering techniques to improve your RAG results.

Retrieval Prompt

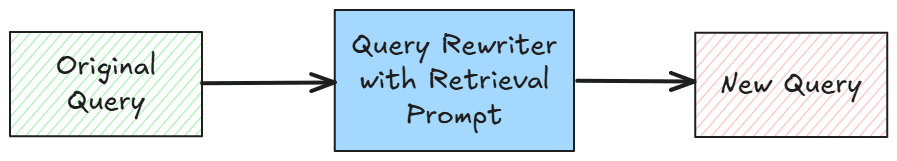

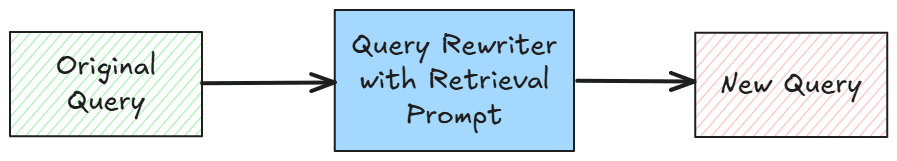

In the RAG structure, the retrieval prompt’s role is to improve the query for the retrieval process. It doesn’t necessarily need to be present: a simple RAG implementation often passes the raw user query straight to the knowledge base. When a retrieval prompt is used, its job is query enhancement, such as rewriting the query before retrieval.

Let’s explore the retrieval prompt techniques one by one.

Query Expansion

Query expansion, as the name suggests, expands the current query used for retrieval. The goal is to retrieve more relevant documents by rewriting the query with better wording.

We can expand the query by asking the LLM to rewrite it, generate synonyms, or add contextual keywords using domain expertise. Here is an example prompt to expand the current query.

“Expand the query {query} into 3 search-friendly versions using synonyms and related terms. Prioritize technical terms from {domain}.”

We can use the model to expand the current query with prompts specific to our use cases. You can even add much more detail with prompt examples you know work well.
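As a minimal sketch of how the expansion prompt might be wired into code, the snippet below fills the template and cleans up the model’s reply. The `llm` callable, the `fake_llm` stand-in, and the assumption that the model answers with one variant per line are all hypothetical; swap in whatever client and parsing your stack actually needs.

```python
import re
from typing import Callable, List

EXPANSION_TEMPLATE = (
    "Expand the query {query} into 3 search-friendly versions using "
    "synonyms and related terms. Prioritize technical terms from {domain}."
)


def expand_query(query: str, domain: str, llm: Callable[[str], str]) -> List[str]:
    """Return the original query plus the de-duplicated variants the LLM proposes."""
    raw = llm(EXPANSION_TEMPLATE.format(query=query, domain=domain))
    variants = [query]
    seen = {query.lower()}
    for line in raw.splitlines():
        # Drop leading list markers such as "1.", "2)", "-", or "*".
        cleaned = re.sub(r"^\s*(?:\d+[.)]|[-*•])\s*", "", line).strip()
        if cleaned and cleaned.lower() not in seen:
            seen.add(cleaned.lower())
            variants.append(cleaned)
    return variants


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; returns a canned numbered list.
    return (
        "1. reset forgotten password\n"
        "2. account credential recovery\n"
        "3. Reset forgotten password"
    )


queries = expand_query("how do I reset my password", "identity management", fake_llm)
print(queries)
```

Each returned string can then be sent to the retriever separately and the result lists merged, which is one common way to use expanded queries.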

Contextual Continuity

If the user already has a history with the system, we can add the previous conversation turns to the current query. In other words, we use the conversation history to refine the retrieval query.

The technique refines the query on the assumption that the conversation history matters for the query that follows. It is very use case-dependent, as there are many cases in which the history is not relevant to the next query the user enters.

For example, the prompt can be written as follows:

“Based on the user’s previous history query about {history} rewrite their new query: {new query} into a standalone search query.”

You can tweak the prompt further by adding more query structure or asking for a summary of the previous history before adding it to the contextual continuity prompt.
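The sketch below shows one way the contextual continuity prompt could be assembled. Keeping only the last few turns (`max_turns`) and returning `None` when there is no history are illustrative choices of ours, not requirements of the technique.

```python
from typing import List, Optional

CONTINUITY_TEMPLATE = (
    "Based on the user's previous history query about {history} rewrite "
    "their new query: {new_query} into a standalone search query."
)


def build_continuity_prompt(
    history: List[str], new_query: str, max_turns: int = 3
) -> Optional[str]:
    """Build the rewrite prompt, or return None when there is nothing to rewrite against."""
    if not history:
        # No history: the caller can send new_query to retrieval unchanged.
        return None
    recent = history[-max_turns:]  # keep only the latest turns to limit prompt size
    return CONTINUITY_TEMPLATE.format(history="; ".join(recent), new_query=new_query)


history = [
    "pricing for the Pro plan",
    "does Pro include SSO",
    "seat limits on Pro",
    "annual discount",
]
prompt = build_continuity_prompt(history, "what about the Team plan?")
print(prompt)
```

Capping the history keeps the prompt short; for long conversations, the summary approach mentioned above is an alternative to simple truncation.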

Hypothetical Document Embeddings (HyDE)

Hypothetical document embeddings (HyDE) is a query enhancement technique that improves the query by generating hypothetical answers. In this technique, we ask the LLM to write the ideal answer to the query, and we use that text to guide retrieval.

It’s a technique that could improve retrieval results, since the hypothetical document gives the retrieval model a direction to search in. The quality of the hypothetical document will also depend on the quality of the LLM.

For example, we can have the following prompt:

“Write a hypothetical paragraph answering {user query}. Use this text to find relevant documents.”

We can use the hypothetical document on its own or add it to a query we have already refined using another technique. What’s important is that the hypothetical documents generated are relevant to the original query.
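To make the flow concrete, here is a small sketch of HyDE-style retrieval. The bag-of-words `embed` function is a toy stand-in for a real embedding model, and `fake_llm` is a hypothetical placeholder for the model call; only the overall shape (generate a hypothetical answer, embed it, rank documents against it) reflects the technique.

```python
import math
import re
from collections import Counter
from typing import Callable, List

HYDE_TEMPLATE = (
    "Write a hypothetical paragraph answering {user_query}. "
    "Use this text to find relevant documents."
)


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hyde_retrieve(
    user_query: str, docs: List[str], llm: Callable[[str], str], k: int = 1
) -> List[str]:
    """Rank docs by similarity to an LLM-written hypothetical answer, not the raw query."""
    hypothetical = llm(HYDE_TEMPLATE.format(user_query=user_query))
    target = embed(hypothetical)
    ranked = sorted(docs, key=lambda d: cosine(target, embed(d)), reverse=True)
    return ranked[:k]


def fake_llm(prompt: str) -> str:
    # Canned hypothetical answer standing in for a real model call.
    return "Restart the router, then check that the DHCP lease was renewed on the device."


docs = [
    "Billing cycles start on the first day of each month.",
    "If the device has no address, renew the DHCP lease after you restart the router.",
]
top = hyde_retrieve("why is my wifi not working", docs, fake_llm)
print(top)
```

Note that the raw query ("why is my wifi not working") shares almost no words with the relevant document, while the hypothetical answer does; that gap is what HyDE tries to close.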

Generation Prompt

RAG consists of retrieval and generation aspects. The generation aspect is where the retrieved context is passed into the LLM to answer the query. Many times, the prompt we use for the generation part is so simple that even when we have the relevant document chunks, the generated output is not sound. We need a proper generation prompt to improve the RAG output.

I’ve previously written more about prompt engineering techniques in a separate article. For now, let’s explore further prompts that are useful for the generation part of the RAG system.

Explicit Retrieval Constraints

RAG is all about passing the retrieved context into the generation prompt. We want the LLM to answer the query according to the documents passed into the model. However, many LLM answers draw on information outside the given context. That’s why we can force the LLM to answer based only on the retrieved documents.

For example, here is a prompt that explicitly forces the model to answer only from the documents.

“Answer using ONLY the provided document sources: {documents}. If the answer isn’t there, say ‘I don’t know.’ Do not use prior knowledge.”

Using the prompt above, we can reduce hallucination from the generator model, because we ask it to set aside its inherent knowledge and use only the context provided.
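A possible way to assemble this constrained prompt in code is sketched below. Numbering the documents, appending a `Question:` line, and the `is_refusal` helper are our own illustrative additions; the core instruction text follows the prompt above.

```python
from typing import List

CONSTRAINED_TEMPLATE = (
    "Answer using ONLY the provided document sources:\n{documents}\n"
    "If the answer isn't there, say 'I don't know.' Do not use prior knowledge.\n"
    "Question: {query}"
)


def build_constrained_prompt(query: str, documents: List[str]) -> str:
    # Number each document so individual sources stay distinguishable in the prompt.
    numbered = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, start=1))
    return CONSTRAINED_TEMPLATE.format(documents=numbered, query=query)


def is_refusal(answer: str) -> bool:
    # Detect the fallback phrase so the caller can handle "no answer" explicitly.
    normalized = answer.strip().lower().replace("\u2019", "'").rstrip(".!")
    return normalized == "i don't know"


prompt = build_constrained_prompt(
    "What is the refund window?",
    ["Refunds are accepted within 30 days.", "Shipping takes 5 days."],
)
print(prompt)
```

Checking for the fallback phrase lets the application decide what to do next, for example widening the retrieval or telling the user that the knowledge base has no answer.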

Chain of Thought (CoT) Reasoning

CoT is a reasoning prompt engineering technique that encourages the model to break down a complex problem before reaching the output. The model uses intermediate reasoning steps to improve the final result.

In a RAG system, the CoT technique helps provide a more structured explanation and justifies the response based on the retrieved context. This can make the result more coherent and accurate.

For example, we can use the following prompt for CoT in the generation step:

“Based on the retrieved context: {retrieved documents}, answer the question {query} step by step, first identifying key facts, then reasoning through the answer.”

Try the prompt above to improve the RAG output, especially if the use case involves a complex question-answering process.
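When the reasoning steps are useful for debugging but only the conclusion should reach the user, one option is to ask for a marker and split on it. This is a sketch under that assumption: the trailing “Final answer:” instruction and the `split_reasoning` helper are not part of the original prompt.

```python
from typing import Tuple

COT_TEMPLATE = (
    "Based on the retrieved context: {retrieved_documents}, answer the question "
    "{query} step by step, first identifying key facts, then reasoning through "
    "the answer. End with a line starting with 'Final answer:'."
)


def split_reasoning(response: str, marker: str = "Final answer:") -> Tuple[str, str]:
    """Split a model response into (reasoning, final answer) at the last marker."""
    head, sep, tail = response.rpartition(marker)
    if not sep:
        # Marker missing: treat the whole response as the answer.
        return "", response.strip()
    return head.strip(), tail.strip()


reasoning, answer = split_reasoning(
    "Key facts: the plan costs $10 per seat.\n"
    "Reasoning: 5 seats times $10 is $50.\n"
    "Final answer: $50 per month"
)
print(answer)
```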

Extractive Answering

Extractive answering is a prompt engineering technique that produces output using only the relevant text from the passed documents instead of generating elaborate responses from it. In this technique, we return the exact portion of the retrieved documents so that hallucination is minimized.

An example prompt is shown below:

“Extract the most relevant passage from the retrieved documents {retrieved documents} that answers the query {query}.

Return only the exact text from {retrieved documents} without modification.”

Using the above prompt, we instruct the model not to modify the text from the knowledge base. This matters in use cases where we don’t want any deviation from the document, such as legal or medical applications.
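Because the prompt only asks for verbatim text, it can be worth checking that the model complied. The helper below is a minimal sketch of such a check; `verify_extraction` is a hypothetical name, and exact substring matching is a deliberately strict assumption that may need loosening (for example, whitespace normalization) in practice.

```python
from typing import List, Optional


def verify_extraction(passage: str, documents: List[str]) -> Optional[int]:
    """Return the index of the document containing the passage verbatim, else None."""
    candidate = passage.strip()
    if not candidate:
        return None
    for index, doc in enumerate(documents):
        if candidate in doc:
            return index
    return None


documents = [
    "The tenant must give 60 days written notice before vacating.",
    "The deposit is returned within 14 days of the final inspection.",
]
source = verify_extraction("returned within 14 days of the final inspection", documents)
print(source)
```

A `None` result signals that the model paraphrased or invented text, so the answer can be rejected or regenerated.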

Contrastive Answering

Contrastive answering is a technique that generates a response from the given context to answer the query from multiple perspectives. It is used to weigh different viewpoints and arguments where a single perspective might not be sufficient.

This technique is useful if our RAG use cases are in a domain that involves ongoing debate. It helps users see different interpretations and encourages a deeper exploration of the documents.

For example, here is a prompt that provides contrastive answers using the pros and cons found in the documents:

“Based on the retrieved documents: {retrieved documents}, provide a balanced analysis of {query} by listing:

– Pros (supporting arguments)

– Cons (counterarguments)

Support each point with evidence from the retrieved context.”

You can always tweak the prompt into a more detailed structure and discussion format.
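If the application needs the two sides as structured data, the response can be parsed afterwards. The sketch below assumes the model answers with “Pros” and “Cons” headings on their own lines followed by bullet lines; that output format and the `parse_pros_cons` helper are assumptions for illustration, and real responses may need more forgiving parsing.

```python
import re
from typing import Dict, List, Optional


def parse_pros_cons(response: str) -> Dict[str, List[str]]:
    """Group bullet lines under the most recent 'Pros' or 'Cons' heading."""
    sections: Dict[str, List[str]] = {"pros": [], "cons": []}
    current: Optional[str] = None
    for line in response.splitlines():
        text = line.strip()
        heading = re.match(r"(pros|cons)\b", text.lower())
        if heading:
            current = heading.group(1)
        elif current and text and text[0] in "-–*•":
            # Strip the bullet marker and keep the argument text.
            sections[current].append(text[1:].strip())
    return sections


parsed = parse_pros_cons(
    "Pros:\n"
    "- Lower latency according to the benchmark report\n"
    "- Simpler deployment\n"
    "Cons:\n"
    "– Higher memory use noted in the operations guide\n"
)
print(parsed)
```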

Conclusion

RAG is used to improve the output of LLMs by utilizing external knowledge. Using RAG, the model can improve the accuracy and relevance of its results using real-time data.

A basic RAG system is divided into two parts: retrieval and generation. In each part, the prompts can play a role in improving the results. This article has explored several prompt engineering patterns that can support successful RAG implementations.

I hope this has helped!
