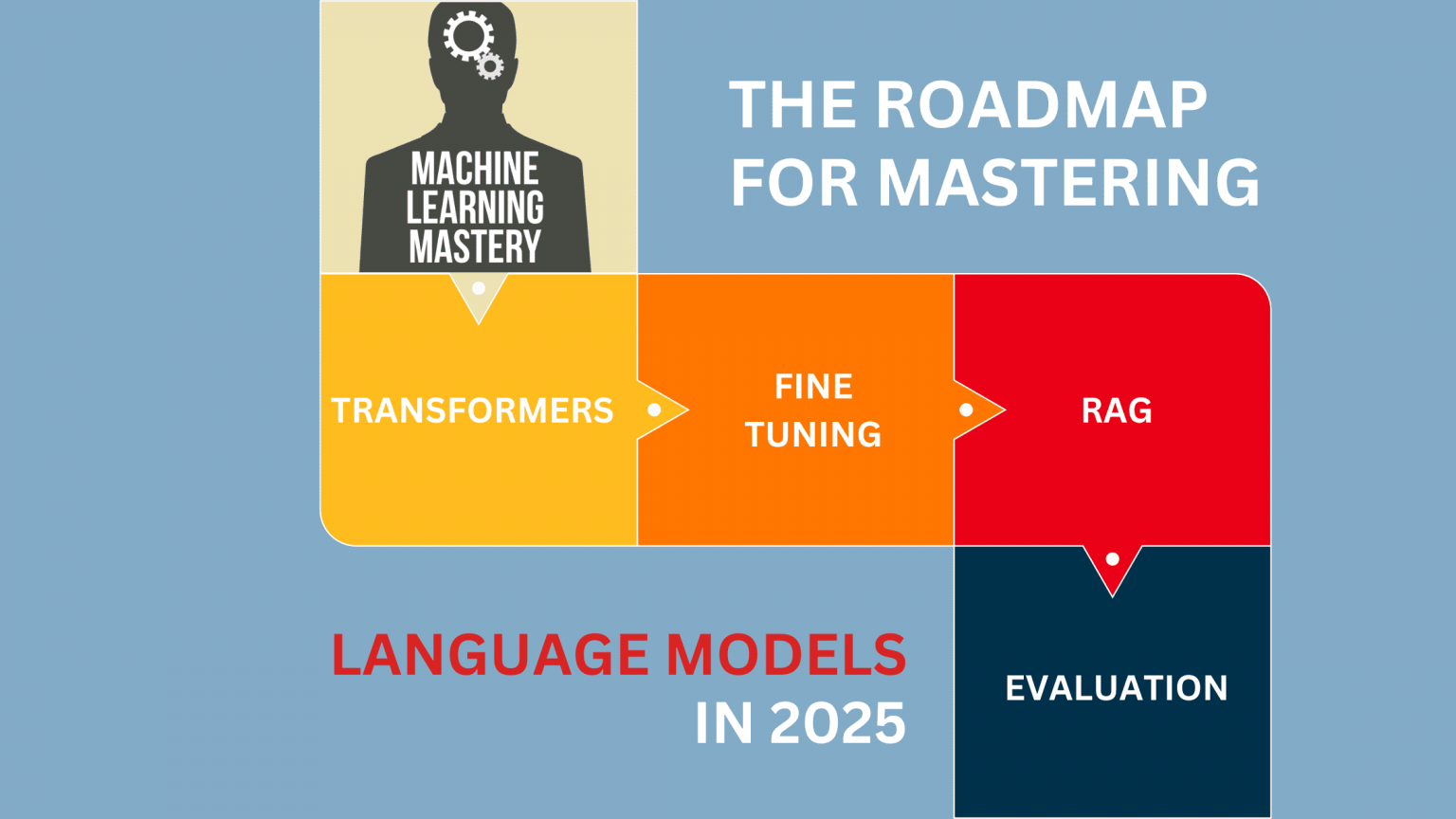

The Roadmap for Mastering Language Models in 2025

Image by Editor | Midjourney

Large language models (LLMs) are a big step forward in artificial intelligence. They can predict and generate text that sounds like it was written by a human. LLMs learn the rules of language, like grammar and meaning, which allows them to perform many tasks. They can answer questions, summarize long texts, and even create stories. The growing need for automatically generated and organized content is driving the expansion of the large language model market. According to one report, Large Language Model (LLM) Market Size & Forecast:

“The global LLM Market is currently witnessing robust growth, with estimates indicating a substantial increase in market size. Projections suggest a notable expansion in market value, from USD 6.4 billion in 2024 to USD 36.1 billion by 2030, reflecting a substantial CAGR of 33.2% over the forecast period”

This means 2025 might be the best year to start learning LLMs. Learning LLMs in depth calls for a structured, stepwise approach that covers concepts, models, training, and optimization, as well as deployment and advanced retrieval methods. This roadmap presents a step-by-step method to gain expertise in LLMs. So, let’s get started.

Step 1: Cover the Fundamentals

You can skip this step if you already know the basics of programming, machine learning, and natural language processing. However, if you are new to these concepts, consider learning them from the following resources:

- Programming: You need to learn the basics of programming in Python, the most popular programming language for machine learning. These resources can help you learn Python:

Learn Python – Full Course for Beginners [Tutorial] – YouTube (Recommended)

Python Crash Course For Beginners – YouTube

TEXTBOOK: Learn Python The Hard Way

- Machine Learning: After you learn programming, you should cover the basic concepts of machine learning before moving on to LLMs. The key here is to focus on concepts like supervised vs. unsupervised learning, regression, classification, clustering, and model evaluation. The best course I found to learn the basics of ML is:

Machine Learning Specialization by Andrew Ng | Coursera

It’s a paid course that you can buy if you need a certification, but fortunately, I’ve found it on YouTube for free too:

Machine Learning by Professor Andrew Ng

- Natural Language Processing: It is very important to learn the fundamental topics of NLP if you want to learn LLMs. Focus on the key concepts: tokenization, word embeddings, attention mechanisms, etc. I’ve given a few resources that can help you learn NLP:

Coursera: DeepLearning.AI Natural Language Processing Specialization – Focuses on NLP techniques and applications (Recommended)

Stanford CS224n (YouTube): Natural Language Processing with Deep Learning – A comprehensive lecture series on NLP with deep learning.

Step 2: Understand Core Architectures Behind Large Language Models

Large language models rely on various architectures, with transformers being the most prominent foundation. Understanding these different architectural approaches is essential for working effectively with modern LLMs. Here are the key topics and resources to enhance your understanding:

- Understand the transformer architecture, with emphasis on self-attention, multi-head attention, and positional encoding.

- Start with Attention Is All You Need, then explore different architectural variants: decoder-only models (GPT series), encoder-only models (BERT), and encoder-decoder models (T5, BART).

- Use libraries like Hugging Face’s Transformers to access and implement various model architectures.

- Practice fine-tuning different architectures for specific tasks like classification, generation, and summarization.
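To make self-attention and positional encoding concrete, here is a minimal pure-Python sketch of a single attention head. Treat it as an illustration only: the function names are my own, and the queries, keys, and values are passed in directly, whereas a real transformer produces them with learned projection matrices.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention for one head.

    queries, keys: lists of vectors with the same dimension d_k
    values: list of vectors, one per key
    Returns one output vector per query.
    """
    d_k = len(keys[0])
    dim_v = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]
        )
    return outputs


def positional_encoding(position, d_model):
    """Sinusoidal positional encoding: sin on even indices, cos on odd ones."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding
```

Multi-head attention runs several such heads in parallel on different learned projections and concatenates their outputs.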

Recommended Learning Resources

Step 3: Focusing on Large Language Models

With the basics in place, it’s time to focus specifically on LLMs. These courses are designed to deepen your understanding of their architecture, ethical implications, and real-world applications:

- LLM University – Cohere (Recommended): Offers both a sequential track for newcomers and a non-sequential, application-driven path for seasoned professionals. It provides a structured exploration of both the theoretical and practical aspects of LLMs.

- Stanford CS324: Large Language Models (Recommended): A comprehensive course exploring the theory, ethics, and hands-on practice of LLMs. You’ll learn how to build and evaluate LLMs.

- Maxime Labonne Guide (Recommended): This guide provides a clear roadmap for two career paths: LLM Scientist and LLM Engineer. The LLM Scientist path is for those who want to build advanced language models using the latest techniques. The LLM Engineer path focuses on creating and deploying applications that use LLMs. It also includes The LLM Engineer’s Handbook, which takes you step-by-step from designing to launching LLM-based applications.

- Princeton COS597G: Understanding Large Language Models: A graduate-level course that covers models like BERT, GPT, T5, and more. Ideal for those aiming to engage in deep technical research, this course explores both the capabilities and limitations of LLMs.

- Fine Tuning LLM Models – Generative AI Course: When working with LLMs, you’ll often need to fine-tune them, so consider learning efficient fine-tuning techniques such as LoRA and QLoRA, as well as model quantization techniques. These approaches can help reduce model size and computational requirements while maintaining performance. This course will teach you fine-tuning using QLoRA and LoRA, as well as quantization using Llama 2, Gradient, and the Google Gemma model.

- Finetune LLMs to teach them ANYTHING with Huggingface and Pytorch | Step-by-step tutorial: It provides a comprehensive guide on fine-tuning LLMs using Hugging Face and PyTorch. It covers the entire process, from data preparation to model training and evaluation, enabling viewers to adapt LLMs for specific tasks or domains.
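Since LoRA comes up in several of these courses, a small sketch of its core idea may help: the base weight matrix W stays frozen, and only two small matrices A and B are trained, so the effective weight becomes W + (alpha / r) · B · A. The code below is a plain-Python illustration with names of my own choosing, not how any particular library implements it.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A.

    w: frozen base weight, shape (d_out, d_in)
    a: trainable matrix, shape (r, d_in)
    b: trainable matrix, shape (d_out, r)
    """
    r = len(a)  # the adapter rank
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d_out, d_in, r):
    """Compare full fine-tuning with a rank-r LoRA adapter for one layer."""
    return {"full": d_out * d_in, "lora": r * (d_in + d_out)}
```

For a single 4096 × 4096 layer with rank 8, `trainable_params(4096, 4096, 8)` gives 16,777,216 weights for full fine-tuning versus 65,536 for the adapter, which is where the memory savings come from.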

Step 4: Build, Deploy & Operationalize LLM Applications

Learning a concept theoretically is one thing; applying it practically is another. The former strengthens your understanding of fundamental ideas, while the latter enables you to translate these concepts into real-world solutions. This section focuses on integrating large language models into projects using popular frameworks, APIs, and best practices for deploying and managing LLMs in production and local environments. By mastering these tools, you’ll efficiently build applications, scale deployments, and implement LLMOps strategies for monitoring, optimization, and maintenance.

- Application Development: Learn how to integrate LLMs into user-facing applications or services.

- LangChain: LangChain is a fast and efficient framework for LLM projects. Learn how to build applications using LangChain.

- API Integrations: Explore how to connect various APIs, like OpenAI’s, to add advanced features to your projects.

- Local LLM Deployment: Learn to set up and run LLMs on your local machine.

- LLMOps Practices: Learn the methodologies for deploying, monitoring, and maintaining LLMs in production environments.
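Whether you use a framework or call an API directly, many LLM application steps follow the same shape: fill a prompt template, call a model, and parse the reply. The sketch below shows that shape in plain Python. Every name in it is hypothetical, and `stub_llm` is a placeholder I made up so the example runs offline; in a real project you would swap in an actual API or local model call.

```python
from typing import Callable, List


def build_prompt(template: str, **fields: str) -> str:
    """Fill a prompt template with user-supplied fields."""
    return template.format(**fields)


def parse_bullets(text: str) -> List[str]:
    """Turn a bulleted model reply into a clean list of strings."""
    return [
        line.lstrip("-* ").strip() for line in text.splitlines() if line.strip()
    ]


def run_chain(template: str, llm: Callable[[str], str], **fields: str) -> List[str]:
    """Prompt -> model -> parser: one step of an LLM application."""
    prompt = build_prompt(template, **fields)
    reply = llm(prompt)
    return parse_bullets(reply)


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call (assumption, not a real API)."""
    return "- first point\n- second point"


if __name__ == "__main__":
    print(run_chain("Summarize {topic} as bullets.", stub_llm, topic="RAG"))
```

Keeping the model behind a plain function argument makes it easy to test the surrounding code without network access and to switch providers later.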

Recommended Learning Resources & Projects

Building LLM applications:

Local LLM Deployment:

Containerizing LLM-Powered Apps: Chatbot Deployment – A step-by-step guide to deploying local LLMs with Docker.

Deploying & Managing LLM Applications in Production Environments:

GitHub Repositories:

- Awesome-LLM: It’s a curated collection of papers, frameworks, tools, courses, tutorials, and resources focused on large language models (LLMs), with a special emphasis on ChatGPT.

- Awesome-langchain: This repository is the hub to track initiatives and projects related to LangChain’s ecosystem.

Step 5: RAG & Vector Databases

Retrieval-Augmented Generation (RAG) is a hybrid approach that combines information retrieval with text generation. Instead of relying solely on pre-trained knowledge, RAG retrieves relevant documents from external sources before generating responses. This improves accuracy, reduces hallucinations, and makes models more useful for knowledge-intensive tasks.

- Understand RAG & its Architectures (e.g., standard RAG, Hierarchical RAG, Hybrid RAG)

- Vector Databases – Understand how to implement vector databases with RAG. Vector databases store and retrieve information based on semantic meaning rather than exact keyword matches. This makes them ideal for RAG-based applications, as they allow fast and efficient retrieval of relevant documents.

- Retrieval Strategies – Implement dense retrieval, sparse retrieval, and hybrid search for better document matching.

- LlamaIndex & LangChain – Learn how these frameworks facilitate RAG.

- Scaling RAG for Enterprise Applications – Understand distributed retrieval, caching, and latency optimizations for handling large-scale document retrieval.
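The retrieve-then-generate flow can be sketched in a few lines. This toy version is only an illustration under loud assumptions: the "embedding" is a bag-of-words count vector (closer to sparse retrieval than to the dense embeddings a real model would produce), the "vector database" is a Python list, and all function names are my own.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts (assumption, not a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query, documents, k=2):
    """Put the retrieved documents into the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "vector databases store embeddings",
        "docker packages applications",
        "llamas live in the andes",
    ]
    print(build_rag_prompt("how do vector databases work", docs, k=1))
```

In a production setup, `embed` would call an embedding model and `retrieve` would query a vector database, but the prompt-building step stays much the same.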

Recommended Learning Resources & Projects

Basic Foundational Courses:

Advanced RAG Architectures & Implementations:

Enterprise-Grade RAG & Scaling:

Step 6: Optimize LLM Inference

Optimizing inference is crucial for making LLM-powered applications efficient, cost-effective, and scalable. This step focuses on techniques to reduce latency, improve response times, and minimize computational overhead.

Key Topics

- Model Quantization: Reduce model size and improve speed using techniques like 8-bit and 4-bit quantization (e.g., GPTQ, AWQ).

- Efficient Serving: Deploy models efficiently with frameworks like vLLM, TGI (Text Generation Inference), and DeepSpeed.

- LoRA & QLoRA: Use parameter-efficient fine-tuning methods to enhance model performance without high resource costs.

- Batching & Caching: Optimize API calls and memory usage with batch processing and caching strategies.

- On-Device Inference: Run LLMs on edge devices using model formats like GGUF (for llama.cpp) and optimized runtimes like ONNX Runtime and TensorRT.
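To see what quantization does at the lowest level, here is a sketch of simple symmetric (absmax) 8-bit quantization in plain Python. This is the basic round-to-nearest idea only; methods like GPTQ and AWQ are considerably more sophisticated, and the function names here are my own.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Avoid dividing by zero when every weight is 0.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.0, 0.25]
    q, scale = quantize_int8(original)
    restored = dequantize(q, scale)
    # Each restored value is within half a quantization step of the original.
    print(q, scale, restored)
```

Each weight now needs 8 bits instead of 32, at the cost of a rounding error of at most half the scale per value.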

Recommended Learning Resources

- Efficiently Serving LLMs – Coursera – A guided project on optimizing and deploying large language models efficiently for real-world applications.

- Mastering LLM Inference Optimization: From Theory to Cost-Effective Deployment – YouTube – A tutorial discussing the challenges and solutions in LLM inference. It focuses on scalability, performance, and cost management. (Recommended)

- MIT 6.5940 Fall 2024 TinyML and Efficient Deep Learning Computing – It covers model compression, quantization, and optimization techniques to deploy deep learning models efficiently on resource-constrained devices. (Recommended)

- Inference Optimization Tutorial (KDD) – Making Models Run Faster – YouTube – A tutorial from the Amazon AWS team on methods to accelerate LLM runtime performance.

- Large Language Model inference with ONNX Runtime (Kunal Vaishnavi) – A guide on optimizing LLM inference using ONNX Runtime for faster and more efficient execution.

- Run Llama 2 Locally On CPU without GPU GGUF Quantized Models Colab Notebook Demo – A step-by-step tutorial on running Llama 2 models locally on a CPU using GGUF quantization.

- Tutorial on LLM Quantization w/ QLoRA, GPTQ and Llamacpp, LLama 2 – Covers various quantization techniques like QLoRA and GPTQ.

- Inference, Serving, PagedAtttention and vLLM – Explains inference optimization techniques, including PagedAttention and vLLM, to speed up LLM serving.

Wrapping Up

This guide covers a comprehensive roadmap to learning and mastering LLMs in 2025. I know it might seem overwhelming at first, but trust me: if you follow this step-by-step approach, you’ll cover everything in no time. If you have any questions or need more help, do comment.
