For months, an anonymous TikTok account hosted dozens of AI-generated videos of explosions and burning cities. The videos racked up tens of millions of views and fuelled other posts that claimed, falsely, that they were real footage of the war in Ukraine.

After CBC News contacted TikTok and the account owner for comment, the account disappeared from the platform.

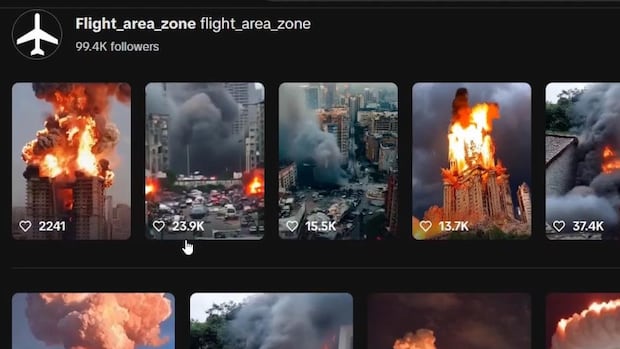

The account, flight_area_zone, had several videos featuring massive explosions that reached tens of millions of viewers. The videos bore hallmarks of AI generation but lacked the disclaimer required by TikTok guidelines. TikTok declined to comment on the account.

Several of the videos were spread across different social media platforms by other users, who posted them alongside claims that they depicted actual war footage, with several gaining tens of thousands of views. In these posts, some commenters appear to take the videos at face value and either celebrate or denounce the purported damage, leaving them with an inaccurate sense of the war.

The rise of ‘AI slop’

The flight_area_zone account is just one example of a broader trend in social media content, something experts call “AI slop.”

It generally refers to content (images, video, text) created using AI. It is often poor quality, sensational or sentimental, in ways that seem designed to generate clicks and engagement.

AI-generated content has become an important factor in online misinformation. A preprint study published online this year, co-authored by Google researchers, showed that AI-generated misinformation quickly became nearly as popular as traditional forms of manipulated media in 2023.

A TikTok account hosting videos of AI-generated explosions that others claimed were in Ukraine was removed from the platform following inquiries from the CBC News visual investigations team. The account shows how low-quality content made with generative AI, often called ‘AI slop’, can warp perceptions and fuel misinformation.

In October, for example, a similar-looking video from a different TikTok account went viral as people claimed it depicted an Israeli strike on Lebanon. The video, showing raging fires in Beirut, was shared widely across social media and by several prominent accounts. In some cases it was packaged along with real videos showing fires in the Lebanese capital, further blurring the line between fake and real news.

Facebook has also seen an influx of AI-generated content meant to create engagement (clicks, views and more followers), which can generate revenue for its creators.

The flight_area_zone account also had a subscriber function where people could pay for things like unique badges or stickers.

The explosion videos were convincing to some: commenters frequently asked for details about where the explosions were, or expressed joy or dismay at the images. But the videos still had some of the telltale distortions of AI-generated content.

Cars and people on the street appear sped up or warped, and there are several other obvious errors, like a car that’s far too large. Many of the videos also share identical audio.

The account also featured videos other than burning skylines. In one, a cathedral-like building burns. In another, a rocket explodes outside of a bungalow.

Hundreds of technology and artificial intelligence experts are urging governments globally to take immediate action against deepfakes (AI-generated voices, images and videos of people), which they say are an ongoing threat to society through the spread of mis- and disinformation and could affect the outcome of elections.

Older videos showed AI-generated tornadoes and planes catching fire, a progression that suggests experimentation with what kind of content would be popular and drive engagement, which can be lucrative.

One problem with AI slop, according to Aimée Morrison, an associate professor and expert in social media at the University of Waterloo, is that much of the audience isn’t always going to take a second look.

“We have created a culture that is highly dependent on visuals, among a population that is not really trained on how to look at things,” Morrison told CBC News.

A second issue arises with images of war zones, where the reality on the ground is both important and contested.

“It becomes easier to dismiss the actual evidence of atrocities happening in [Ukraine] because so much AI slop content is circulating that some of us then become hesitant to share or believe anything, because we do not want to be called out as having been taken in,” Morrison said.

Researchers said earlier this year that AI-generated hate content is also on the rise.


Separately, UN research warned last year that AI could supercharge “anti-Semitic, Islamophobic, racist and xenophobic content.” Melissa Fleming, the undersecretary-general for global communications, told a UN body that generative AI allowed people to create large quantities of convincing misinformation at a low cost.

Although guidelines for labelling AI-generated content are in place at TikTok and other social media platforms, moderation is still a challenge. There’s the sheer volume of content produced, and the fact that machine learning itself is not always a reliable tool for automatically detecting misleading images.

The flight_area_zone account no longer exists, for example, but an apparently related (but much less popular) account, flight_zone_51, is still active.

These limitations often mean users are responsible for reporting content, or adding context, such as with X’s “community notes” feature. Users can also report unlabelled AI-generated content on TikTok, and the company does moderate and remove content that violates its community guidelines.

Morrison says the responsibility for flagging AI-generated content is shared by both social media platforms and their users.

“Social media sites must require digital watermarking on this stuff. They must do takedowns of stuff that is circulating as misinformation,” she said.

“But generally, this culture needs to do a much better job of training people in critical looking as much as in critical thinking.”
